In the last 18 months, the landscape of artificial intelligence and robotics has undergone a seismic shift, altering our collective perception of the potential and applicability of these technologies in our daily lives.

At the heart of this transformation was a pivotal moment: the launch of ChatGPT by OpenAI on November 30, 2022. This event propelled Large Language Models (LLMs) from the domain of tech aficionados to the forefront of mainstream consciousness, revealing a future where AI could blend seamlessly into our routines and workflows.

ChatGPT, more than a mere tool, emerged as a beacon of AI’s potential. It showcased an AI’s ability to comprehend, interact, and articulate responses with a level of sophistication that seemed almost fantastical. However, as groundbreaking as ChatGPT’s cognitive capabilities were, they also laid bare a crucial shortfall. AI could handle certain complex interactions and single tasks, but its proficiency with complex, multi-stage tasks remained wanting. It could not determine its own next steps. When it hit a roadblock, it would often hallucinate that the work was functioning and complete when it was not, because it could not recognize that the task was actually incomplete or incorrect.

However, the evolution didn’t halt with ChatGPT. In its wake, we’ve witnessed the debut of open-source LLMs from entities such as Mistral, Meta, and TII, alongside advancements in image and video generation technologies.

The trajectory of these developments underscores a rapid pace of innovation, set to redefine digital work through both assistive co-pilot functions and outright automation of certain jobs.

Many fear the advent of Artificial General Intelligence (AGI), but it’s the immediate impact of AI on the workforce and jobs, and our response to it, that should command our attention and concern, well before we face any existential ‘threat of AGI’.

Yet despite improvements in contextual memory and Retrieval-Augmented Generation (RAG), autonomously navigating and completing intricate, sequential tasks remained a challenge for AI.

I always envisioned one day soon we would get there, sometime in the near future, sometime tomorrow… but yesterday, tomorrow was today.

The First Glimpse: Devin

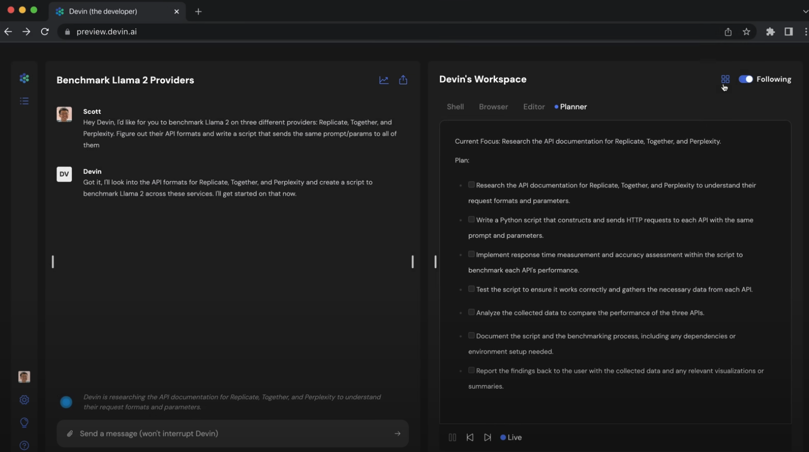

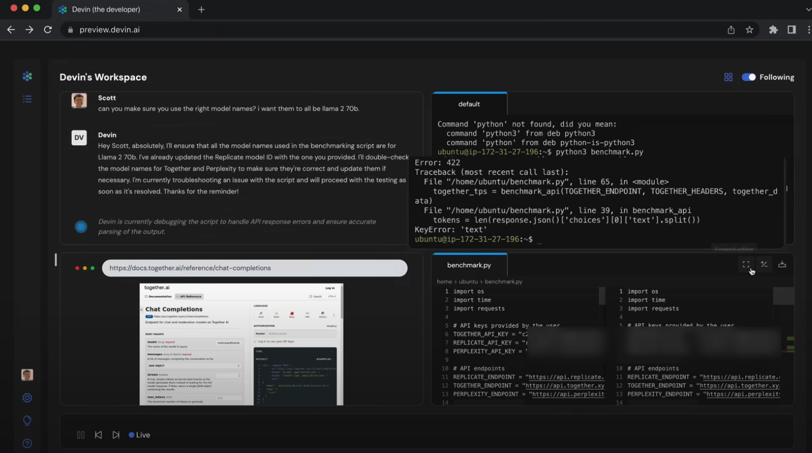

Just two days ago, Cognition Labs unveiled Devin, an autonomous agent that represents a significant stride towards bridging the cognitive-task divide in AI.

First, it must be noted that Scott Wu and the team at Cognition Labs epitomize what one might call the quintessential ‘wunderkinds.’ Collectively boasting 10 IOI gold medals, these leaders and builders have honed their expertise at the forefront of applied AI, contributing to companies such as Cursor, Scale AI, Lunchclub, Modal, Google DeepMind, Waymo, and Nuro. This is all to say that what they have showcased deserves recognition as a breakthrough that pushes AI to a new frontier.

Devin is not just a software tool; it’s an integrated system capable of undertaking engineering tasks autonomously.

Imagine assigning a project scope, similar to what one might post on Upwork, and then witnessing Devin chart its own course to completion. It navigates the internet, learns from the content it encounters, and applies this knowledge to real-world tasks.

This breakthrough goes beyond mere software development; it hints at a future where AI can independently solve complex problems across various domains.

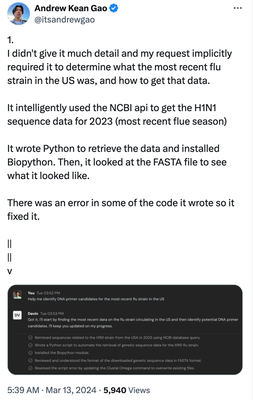

Andrew Gao shared a demo in which he asked Devin to “Help me identify DNA primer candidates for the most recent flu strain in the US.” As Andrew put it, he provided little detail about the task, and Devin made its own decisions about how to solve it.

Early users like Andrew are testing Devin on very real-world applications (i.e., tasks that take humans much longer to complete). Examples are now emerging of Devin taking complex software jobs from Upwork and completing them in hours, or learning new skills by reading a blog post.

In my opinion, Devin represents a ChatGPT moment for AI agents: systems that can autonomously solve complex tasks through multi-layered decision-making, troubleshooting, critical thinking, and problem-solving.

The Physical Gap: Bridging the ‘Brain’ and ‘Body’

The advancements in AI’s cognitive abilities, exemplified by LLMs like ChatGPT and systems like Devin, highlight a growing disparity between the digital ‘brain’ and the physical ‘body’ of robotics.

Despite AI’s strides in the digital realm, its application in the physical world remained nascent. That’s not to discount the multi-modal advances in speech and visual recognition, but there has always been an aspiration for autonomous androids that could free humanity from the limitations of human labor.

When I first had a chance to interact with GPT in 2022, I thought, ‘we solved for the brain before the body.’ Given all the advancements in robotics, from Boston Dynamics to Kiva Systems, I had assumed we would solve for general purpose robots (GPRs) before we built an AI I would consider truly intelligent (one that could pass the Turing Test).

The question then arises: What if we could merge LLMs’ cognitive capabilities with the tangible, interactive potential of robotics?

This question sets the stage for the next frontier in AI and robotics integration. Figure, a robotics company (backed by investors such as Jeff Bezos, Nvidia, Microsoft, OpenAI, to name a few of the prominent supporters) has begun to answer this call by partnering with OpenAI to infuse humanoid robots with LLM-powered cognitive functions.

This collaboration aims to create robots that can reason, interact, and assist in manual labor, suggesting a future where AI not only complements but enhances human abilities in the physical domain.

Figure’s Status Update video showcases this OpenAI integration.

The unveiling of Devin is just the beginning. The collaboration between Figure and OpenAI is not just about creating robots; it brings the concept of AI co-pilots into the tangible world.

Figure, alongside pioneers like Boston Dynamics and Tesla (with its Tesla Bot, Optimus), is pushing the boundaries of what’s possible, demonstrating the viability of combining AI’s ‘brain’ with robotics’ ‘body’ to address real-world challenges and tasks.

An Inflection Point on the Horizon

Considering the rapid advancements we’ve witnessed in the last 18 months, it’s not just plausible but highly likely that we’ll see the emergence of General Purpose Robots equipped with cognitive AI capabilities within the next 3 to 5 years. As these groundbreaking products reach critical mass in production and adoption, we’ll see the familiar economies of scale at play.

This pivotal shift will dramatically reduce the cost of such androids, enabling their transition from a luxury accessible only to upper-income households and corporations to a ubiquitous presence across a spectrum of economic backgrounds, including middle- and lower-income households.

This democratization of advanced robotics signifies not just a technological revolution, but a societal transformation, as the benefits of AI and robotics become a tangible part of daily life for a broader segment of the global population.

These developments paint a vivid picture of a future where AI and robotics are seamlessly integrated into our lives, not as replacements but as enhancements to human capability.

As we stand on the brink of this new era, it’s crucial to approach the integration of AI and robotics with thoughtful consideration of its implications on society, employment, and ethics.

Yet I remain optimistic; the alternative is to fear the inevitable. The convergence of cognitive AI and physical robotics promises not only to revolutionize how we work and live but also to redefine the partnership between humans and machines.

It’s essential to recognize that these iterations of autonomous agents and robots represent merely the infancy of their kind. They are, paradoxically, the most primitive versions we will ever experience. With each subsequent iteration, evolution, and update, their capabilities will expand exponentially. This inevitable progression prompts us to contemplate the nature of employment and of societal relationships themselves.

- How do we adapt to a future where the necessity for human labor diminishes, and how do we ensure the equitable distribution of wealth generated by these technologies?

Imagine a world where mundane tasks such as cooking and cleaning are delegated to robotic assistants (our own Rosie the Robot Maid). This convenience could liberate us from the drudgery of daily chores, potentially enriching our relationships and leisure time.

However, it also raises profound questions about the nature of our interaction with technology.

- How will our human relationships evolve when shared responsibilities are offloaded to machines?

- What will it mean for the development of life skills and the value we place on human effort?

Lastly, the environmental implications of widespread adoption of autonomous agents and robots cannot be overlooked. The question of powering these entities is paramount. As we move toward a future teeming with AI-driven devices, the demand for energy will surge.

- Where will this energy come from?

This challenge beckons us to accelerate our transition to renewable energy sources, ensuring that the environmental footprint of these technologies does not negate their benefits.

In grappling with these questions, we must engage in collective dialogue, policy-making, and innovation. The answers lie not only in the realms of technology and economics but also in the philosophical underpinnings of our society.

How we respond to these challenges will define the contours of our future, shaping a world in which technology serves humanity and the Earth alike.